Replacing Angular with Plain JavaScript Modules, and Other Page Speed Insights

In days of old, you could achieve a fairly high mobile PageSpeed Insights (PSI) score while building your website with Angular (i.e., Angular 2+, not AngularJS). However, in early 2019, Google changed the PSI algorithm so that it started to care more about JavaScript that runs after the initial page render (in technical terms, the first contentful paint, also known as the FCP). Now, your dutifully optimized site with a score of 99 might drop to 35. And that is exactly what happened to me. In this article, I will share how I got the PSI score back into the 90s.

Why Angular Tanked the Score

I can imagine some fans of frameworks like Angular wondering why such a framework might cause problems when it didn’t use to. While I alluded to it above, the real reasons are nuanced and can take some time to explain.

The main reason is that the FCP (first contentful paint) isn’t the only measure used by PSI to assess the speed of a webpage. Another key measurement is the TTI (time to interactive). Time to interactive essentially means how much time it takes until all assets are downloaded and all code has run, freeing up resources (like the CPU) so the user can start interacting with the full version of the page.

A lot of developers will tell you that you can achieve a very good page speed with a large framework like Angular, and that is true in some ways. If the FCP is your primary measure, it is certainly true, thanks to techniques like server-side rendering (SSR). While those techniques help to display the initial version of the page faster, they don’t do much to reduce the time it takes to download and run the JavaScript. On older mobile devices on slower cell networks, that becomes hugely important. A quick FCP might buy you a few more seconds before users bounce away from your site, but they won’t wait forever.

Once you take into account the TTI (time to interactive), it becomes apparent that there is no real way for a framework as heavy as Angular to load a page quickly. It just takes too long to download, compile, and run the JavaScript. When I say there is “no real way”, I mean that there are ways you can potentially trick PSI, but when it comes to real users using your website, they aren’t going to fall for those tricks. I’ll revisit one of those tricks later.

Gathering the Evidence with Experiments

When proposing to stakeholders that they spend a bunch of time and money on replacing a foundational technology on their website, it’s not enough to say “we think it’s Angular slowing things down”. You have to provide proof. My favorite way of doing that is with experiments that are enabled with a feature flag.

It works like this. You create a theory (e.g., “Angular is slowing down the website”). Then you modify the code to test that theory, and you measure the results with PSI (or, for a quick test, you can use Lighthouse, such as in the “Audits” tab of Chrome’s developer tools). While you could just deploy your experiment directly to a development environment, that can be problematic.

For one, your development environment may not match your production environment, and that may significantly throw off the PSI score (e.g., you may not be compressing all the same assets, you may not be using Cloudflare, and you may be sharing resources like CPU with other websites on the development server). To get around this, you can hide the experiment behind a feature flag and deploy it directly to production.

There are a lot of ways of doing feature flags (e.g., web.config settings, cookies, the Konami code, and so on). My approach of choice is to use query strings to enable experiments. That way, you can see the change very easily just by tacking on a query string like “?experiment=5” or “?experiment=no-angular”.

By using query strings as feature flags to toggle experiments on and off, you also get the added benefit of being able to see what happens when you combine multiple experiments. Sometimes individual experiments have little impact on their own, but combining key experiments can have a huge impact.
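As a minimal sketch of the query-string approach, the function below collects experiment names from the URL using the standard URLSearchParams API. The experiment parameter name matches the examples above, but accepting both repeated keys and comma-separated values is an assumption of this sketch, and getExperiments is a hypothetical helper. The same code works in the browser (pass window.location.search) or in a Node backend deciding what to render.

```javascript
// Collect experiment names from a query string such as
// "?experiment=no-angular&experiment=5" or "?experiment=no-angular,5".
// Supporting both repeated keys and comma-separated values is an assumption.
function getExperiments(search) {
  const params = new URLSearchParams(search); // a leading "?" is ignored
  const experiments = new Set();
  for (const value of params.getAll('experiment')) {
    for (const name of value.split(',')) {
      const trimmed = name.trim();
      if (trimmed) experiments.add(trimmed);
    }
  }
  return experiments;
}

// In the browser: getExperiments(window.location.search)
const experiments = getExperiments('?experiment=no-angular&experiment=lazy-images,5');
if (experiments.has('no-angular')) {
  // e.g., skip rendering the Angular bundle's <script> tag for this request
}
```

Because the result is a Set, checking combined experiments is just a matter of testing several names at once.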

Here are some of the experiments I’ve tried using this approach:

Disable All JavaScript: This can tell you whether the problem has something to do with JavaScript at all.

Add Sample JavaScript: I used this experiment in combination with other experiments to see what the impact would be if I removed Angular and added in jQuery. This was not because I intended to use jQuery; it was to test how a smaller JavaScript payload would impact page speed.

Disable Angular: Rather than disabling all JavaScript, disable just Angular to see what impact that would have.

Remove Third-Party JavaScript: For example, chat plugins, Google Maps, and analytics code. You may not be able to get rid of those dependencies entirely, but you may find ways to optimize them (more on this later).

Disable External Fonts: If you have too many fonts, fonts that are too large, or an inefficient way of loading them, this can help you identify them as an issue.

Disable Images: You can test disabling certain images (such as those below the fold), or you can test disabling all images on the page. This can tell you whether you need to manage images better, such as by lazy loading them or by reducing their size.

Lower Image Quality: Maybe images with 60% JPEG quality would be acceptable if they improve the PSI score significantly.

Add Image Crops: This is another relatively straightforward way to test reducing image size. If you don’t want to fiddle with actually cropping the image for the experiment, you can hard-code a path to a representative image.

Change Cache Durations: What would happen if you cached images and other assets for a year rather than a week? This will tell you what PSI thinks of such a change.

Locally Host External JavaScript: Some external JavaScript is easy to host locally; other scripts require more sophisticated approaches to reroute the requests locally. Fewer domains may improve page speed (or hurt it, if you aren’t using HTTP/2).

Server Push Assets: Push assets such as some of the JavaScript, fonts, CSS, and key images. This can help by allowing the browser to download assets before they’re requested, but it can also hurt by causing the wrong assets to take priority over more important ones.

Remove Bulky Markup: If you have hundreds of kilobytes of HTML, chances are there are large sections you can experiment with removing. If the impact is large, you can consider alternatives (e.g., using <template> tags to keep them out of the DOM initially, loading them with AJAX, or putting some data behind a pager).

Inline Critical Path CSS: See what impact inlining the critical path CSS would have (it could improve the FCP by prioritizing only the CSS necessary to display the page). A tool like Critical Path CSS Generator can help you create a quick experiment.

Disable CSS Entirely: While you would never do that as a fix, it can show you what impact CSS is having on your page speed, which can help inform whether you should spend any time finding ways to optimize it.

There are many more experiments you can do, but these were the key experiments that I needed to diagnose the speed issues I was seeing. Looking at that list, it may seem like a lot of work, and it certainly isn’t all that quick to implement. However, it’s worth keeping in mind that the experiments do not have to be as robust as the solutions you use to actually address the page speed issues. You can and should heavily cut corners for these experiments, as they are just tools to prove out theories.

Put another way, experiments just show you the maximum potential gain you can make with a specific change. They don’t need to show the exact gain to show the value of making the change. If there are still questions about the actual gain you can expect, you can create a more involved experiment to answer that question.

One other thing you might be concerned about with these experiments is normal users stumbling across them. This is generally not something you need to worry about, as gating the experiments behind a query string will prevent most genuine users from viewing them. If any malevolent users happen across them, that would also not be a problem as the experiments are pretty innocuous.

Something else you may notice about the list above is that much of it has nothing to do with Angular. This is intentional. I was trying to improve page speed, not remove Angular. These tests showed the relative value of each change I could make to the website. They also showed that the single most valuable change was removing Angular: all the other changes combined had less impact than removing Angular alone.

The main takeaway is that you can use experiments to relatively cheaply figure out the best course of action to improve your page speed score.

Replacing Angular with Plain JavaScript Modules

While replacing Angular with plain JavaScript would probably be enough, I wanted to go a step further to avoid any future issues. Using plain JavaScript will certainly reduce the overall amount of JavaScript used, but it may still be a sizable amount. For that reason, I opted to use JavaScript modules.

JavaScript modules are now supported in all modern browsers (you’ll still have to bundle for IE11). The nice thing about JavaScript modules is that each page loads only the JavaScript it needs. If other pages on the site have complicated features, pages that don’t use those features don’t need to load that JavaScript.

If you’ve never heard of JavaScript modules before, here is a quick introduction. First, make sure your HTML includes JavaScript modules a bit differently than normal JavaScript:

<script src="slideshow.js" type="module"></script>

Notice the type attribute is set to “module”. This is required for JavaScript modules to function properly. Older browsers like IE11 can get an alternate bundled version of the JavaScript rather than the modular version:

<script src="bundled.js" nomodule></script>

Note the “nomodule” attribute. Browsers that support JavaScript modules will ignore any script tag with this attribute, while older browsers don’t know what it means so they’ll load the script tag normally. This is a handy technique that allows you to load JavaScript modules on modern browsers and bundled JavaScript on older browsers.

Here’s what your slideshow JavaScript module might look like (slideshow.js):

    import Swipe from './swipe.js';
    import Carousel from './carousel.js';

    const selector = '[data-widget-slideshow]';
    const slideshowEl = document.querySelector(selector);
    const swipe = new Swipe();
    const carousel = new Carousel(slideshowEl, swipe);

    carousel.autoplay();

Note that this file imports two other files. Those two other files might in turn import additional files. This creates a dependency chain that modern browsers know how to resolve. Because a page only downloads the modules it actually imports, this can massively reduce the amount of JavaScript on any given page. The downside is that the browser doesn’t know about each file up front, so it can take longer to download the full chain. However, there is a way around that, which we’ll cover next.

Preload JavaScript Module Dependencies

If slideshow.js imports swipe.js and carousel.js, and swipe.js in turn imports ios-swipe.js and android-swipe.js, and carousel.js in turn imports responsive-image.js, the browser will have to download each file in sequence rather than all of them at once. For example, in order for the browser to start downloading responsive-image.js, it will first need to download carousel.js, which it won’t know to download until it has fully downloaded slideshow.js.
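To make the cost of that chain concrete, here is a small sketch that measures the depth of the example import graph above. The graph is hand-written to mirror the example, and chainDepth is a hypothetical helper. Without preloading, each level has to be downloaded and parsed before the browser discovers the next one, so the depth approximates the number of sequential network round trips.

```javascript
// Hand-written import graph mirroring the slideshow example.
const importGraph = {
  'slideshow.js': ['swipe.js', 'carousel.js'],
  'swipe.js': ['ios-swipe.js', 'android-swipe.js'],
  'carousel.js': ['responsive-image.js'],
  'ios-swipe.js': [],
  'android-swipe.js': [],
  'responsive-image.js': [],
};

// Length of the longest import chain starting at `file` (the file itself counts as 1).
// `visiting` guards against import cycles, which ES modules allow.
function chainDepth(graph, file, visiting = new Set()) {
  if (visiting.has(file)) return 0;
  visiting.add(file);
  let deepest = 0;
  for (const child of graph[file] || []) {
    deepest = Math.max(deepest, chainDepth(graph, child, visiting));
  }
  visiting.delete(file);
  return 1 + deepest;
}

console.log(chainDepth(importGraph, 'slideshow.js')); // 3
```

Here the chain is slideshow.js, then carousel.js (or swipe.js), then responsive-image.js (or one of the swipe modules): three rounds. With every file preloaded, all six requests can start at roughly the same time.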

To avoid this slowdown, you can preload files that you know you will need. There are a few ways to preload, but the general idea is that you give the browser a hint about what files it will need, and it can start downloading them immediately rather than once it has figured out it needs them.

One preloading approach I tried was HTTP/2 server push. While HTTP/2 is certainly a must (to download multiple small JavaScript files concurrently), I found that in my case server push actually seemed to worsen the page speed. I believe this is because the browser prioritized downloading the JavaScript modules over other resources necessary to display the page, such as the fonts and images.

In the future, I might try doing this with a slightly more sophisticated approach to server push, such as configuring the priority level of each resource. However, given a limited timeline, this wasn’t feasible in my case. As I’ll explain later, it also was not necessary.

The approach I landed on was to insert <link rel="preload"> tags using JavaScript. Each of these tags referred to one of the JavaScript module dependencies I knew I would need on the page. Here’s what that code looks like:

    // Paths to preload are provided by the page (e.g., populated by the backend).
    const paths = window.preloadJavaScriptPaths || [];

    for (const path of paths) {
      const element = document.createElement('link');
      element.setAttribute('rel', 'preload');
      element.setAttribute('as', 'script');
      // Module scripts are requested in CORS mode, so the preload needs a
      // matching crossorigin value for the browser to reuse the response.
      element.setAttribute('crossorigin', 'anonymous');
      element.setAttribute('href', path);
      document.body.appendChild(element);
    }

This reads the paths to the JavaScript modules from an array, loops through them, and creates a <link rel="preload"> tag for each one. I chose this approach over including <link rel="preload"> tags directly in the markup because that approach seemed to load the JavaScript prematurely (i.e., it loaded the JavaScript too fast).

It may seem counterintuitive that you could load the JavaScript for the page too fast, so I’ll explain that in the next section. First, though, you may be wondering how to decide which JavaScript files to preload.

Due to time constraints, the approach I took to figuring out which JavaScript modules to preload was to hard-code a list of files for each widget. While not the most elegant solution, it got the job done.

If I were to imagine a more elegant approach, I would be inclined to rely on a Node module to figure out the dependency graph for me. Luckily, there is a Node module that does just that, called dependency-tree.

The idea is that you’d use dependency-tree to figure out all the dependencies for each of your widgets. You could create a build step that stores those dependencies in a file, then use that file at runtime to decide which JavaScript files to preload.

You could do this in one of two ways. Your build process could construct a JavaScript file that is aware of the dependencies for each widget, and that JavaScript would then load only the dependencies required by the widgets actually used on the page. Alternatively, the backend code could manage that, in which case it would just be responsible for populating the window.preloadJavaScriptPaths array from the above code snippet.
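Here is a rough sketch of the build-time half of that idea. It assumes the dependency information has already been flattened into a simple map from each file to the files it imports directly; dependency-tree reports these relationships as a nested tree, but the graph below is hand-written so the example stays self-contained. The widget names, paths, widgetEntries, and preloadPathsFor are all hypothetical.

```javascript
// Hand-written stand-in for dependency information produced at build time:
// each file maps to the files it imports directly.
const graph = {
  '/js/slideshow.js': ['/js/swipe.js', '/js/carousel.js'],
  '/js/swipe.js': ['/js/ios-swipe.js', '/js/android-swipe.js'],
  '/js/carousel.js': ['/js/responsive-image.js'],
  '/js/nav.js': ['/js/swipe.js'],
};

// Hypothetical mapping from widget name to its entry module.
const widgetEntries = {
  slideshow: '/js/slideshow.js',
  nav: '/js/nav.js',
};

// Collect every transitive dependency of the given widgets, once each.
// Entry files are only added if another module imports them; otherwise their
// own <script type="module"> tags already request them.
function preloadPathsFor(widgets) {
  const paths = new Set();
  const visit = (file) => {
    for (const dep of graph[file] || []) {
      if (!paths.has(dep)) {
        paths.add(dep);
        visit(dep); // the has() check also stops import cycles
      }
    }
  };
  for (const widget of widgets) visit(widgetEntries[widget]);
  return [...paths];
}

// The backend could inline this ahead of the preload snippet shown earlier.
const inlineScript =
  `window.preloadJavaScriptPaths = ${JSON.stringify(preloadPathsFor(['slideshow', 'nav']))};`;
```

Shared dependencies such as swipe.js appear only once, even though two widgets import them.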

If you don’t want to fiddle with manually managing JavaScript module dependencies, dependency-tree could be a good option for you.

Deferring Execution of JavaScript Until First User Interaction

One of the factors that PSI uses to assess the speed of your page is the Time To Interactive (TTI). This metric waits until the JavaScript has executed and attached event listeners to the visible DOM nodes. This means that, even though the page may be fully displayed, PSI will still ding you based on how long it takes for your JavaScript to load and execute.

While I only had a very small amount of JavaScript to run, other assets on the page meant that either this JavaScript was being delayed or other JavaScript (e.g., Google Tag Manager and other analytics scripts) was being delayed as a result of this JavaScript loading. This negatively impacted the TTI.

The way I found around this was to delay the execution of the JavaScript until first user interaction (e.g., first key press, first mouse movement, first tap, first scroll, etc.). That way, the JavaScript can download in the background and it will soon be ready for the user, but it won’t run right away, which means it won’t negatively impact the TTI.
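A minimal sketch of the deferral trigger follows. It listens for several interaction events, runs the start callback on whichever fires first, and then removes all of its listeners. The list of event types is an assumption, onFirstInteraction is a hypothetical helper, and the module path in the usage comment is a placeholder. Capturing a click so it can be replayed later (as in the snippet below) is not shown; note that a passive listener cannot call preventDefault.

```javascript
// Event types treated as "the user has started interacting" (an assumption;
// adjust to the interactions your page cares about).
const FIRST_INTERACTION_EVENTS = ['keydown', 'mousemove', 'touchstart', 'scroll', 'click'];

// Call start(event) once, for whichever listed event fires first on target,
// then remove every listener so nothing else is triggered.
function onFirstInteraction(target, start, events = FIRST_INTERACTION_EVENTS) {
  const options = { capture: true, passive: true };
  let started = false;
  const handler = (event) => {
    if (started) return;
    started = true;
    for (const type of events) target.removeEventListener(type, handler, options);
    start(event);
  };
  for (const type of events) target.addEventListener(type, handler, options);
}

// Browser usage (the module path is a placeholder; ideally it is already preloaded):
// onFirstInteraction(window, () => import('/js/main.js'));
```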

As one example of how that works, I capture the first click event on the page, start running the JavaScript, then once the JavaScript has loaded I replay that click event on the original element that was clicked before the JavaScript was loaded:

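    // Runs after the deferred JavaScript has loaded and initialized.
    if (window.deferredClick) {
      setTimeout(function () {
        const element = window.deferredClick.element;
        // Blur and refocus first so focus-dependent handlers see what a real click would produce.
        element.blur();
        element.focus();
        element.click();
        window.deferredClick = null;
      });
    }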

Replaying events can get a bit convoluted, which you can see a bit of in the above code snippet. Rather than just replay the click event, I first have to unfocus, refocus, then replay the click. In my case, this was due to different browsers handling focus events differently, which was interfering with some accessibility features that the main navigation relied upon. This attempts to replicate what the focus events might have looked like if this were a natural click event rather than a replayed click event.

One gotcha to keep in mind is that this trick may obscure your true page speed. While PSI gives you an estimated score, what really matters is how your users experience interacting with your website. If you have a megabyte of JavaScript that you defer using this approach, PSI might think you have a fantastic score, but in reality mobile users could be waiting 10, 20, or 30 seconds before they can interact with the page. In my case, I had about 20KB of JavaScript to load, which only takes a second or two on a very slow mobile network, and only a small fraction of a second to execute.

The second or two it takes for the page to become interactive should be about equivalent to the amount of time between when the page first displays and when the user first interacts with the page. If they do interact with the page before the JavaScript has loaded, they will probably only execute a single interaction event, which is fine since your JavaScript will then replay that event once it has fully loaded.
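To put rough numbers on that, the sketch below computes raw download time as transferred size divided by bandwidth, plus an optional latency term. The 400 kbps link speed is an assumption standing in for a very slow mobile connection, downloadSeconds is a hypothetical helper, and the formula ignores TCP slow start, extra round trips, and the CPU time needed to parse and execute, so treat the results as lower bounds.

```javascript
// Back-of-the-envelope download time: transferred bits / bandwidth, plus latency.
// bytes should be the transferred (compressed) size.
function downloadSeconds(bytes, kbps, roundTripMs = 0) {
  const bits = bytes * 8;
  return bits / (kbps * 1000) + roundTripMs / 1000;
}

console.log(downloadSeconds(20_000, 400));    // 0.4  (about 20KB of deferred JavaScript)
console.log(downloadSeconds(1_000_000, 400)); // 20   (about 1MB of JavaScript)
```

Even with these simplifications, the gap between roughly 20KB and 1MB is the difference between a fraction of a second and tens of seconds.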

One more gotcha I had to be mindful of is that certain features of the site required JavaScript in order to display. As luck would have it, those features didn’t display above the fold on mobile. However, they did display above the fold on desktop. My workaround was to use an intersection observer to detect whether that feature was visible above the fold and, if so, load the JavaScript immediately.

That was fine in my case since that would only happen on a desktop browser, and desktop browsers tend to have both faster CPUs and faster network connections than mobile devices. Another workaround would be to server-side render any components that are visible above the fold, in which case the JavaScript isn’t necessary to render the first displayed version of the page.
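The above-the-fold check can be sketched with IntersectionObserver, which reports an initial entry for each element shortly after it starts observing it. This sketch only looks at that first report, so it answers "is the widget visible right now?" and otherwise leaves loading to the first-interaction trigger. loadIfInitiallyVisible is a hypothetical helper, the module path in the usage comment is a placeholder, and the Observer parameter exists only so the logic can be exercised outside a browser.

```javascript
// Load a widget's JavaScript immediately only if the widget is on screen when
// observation starts (e.g., above the fold on desktop).
function loadIfInitiallyVisible(element, loadNow, Observer = globalThis.IntersectionObserver) {
  if (typeof Observer !== 'function') return false; // no support: rely on the interaction trigger
  const observer = new Observer((entries, obs) => {
    obs.disconnect(); // only the initial state matters here
    if (entries.some((entry) => entry.isIntersecting)) loadNow();
  });
  observer.observe(element);
  return true;
}

// Browser usage:
// loadIfInitiallyVisible(
//   document.querySelector('[data-widget-slideshow]'),
//   () => import('/js/slideshow.js'),
// );
```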

Additional Ways I Improved Page Speed

Reducing the JavaScript by a couple of orders of magnitude will do wonders for your page speed, but that wasn’t the end of my journey. In this section, I’ll cover a few additional ways I improved page speed (aside from replacing Angular with plain JavaScript), as well as some further ideas I have seen work well on other websites.

Page Speed Improvement: Defer Loading Images

At first, I lazy loaded images that weren’t on the screen using an intersection observer. However, I found that once I deferred JavaScript execution, lazy loading images until right before they appeared in the viewport wasn’t all that necessary.

Instead, I initially only rendered the images that were necessary, then the deferred JavaScript inserted the remaining images once it loaded. This helped to ensure the right resources were loaded in the right order (i.e., HTML, CSS, initial images, JavaScript, then remaining images), while also reducing complexity.
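One way to get a similar effect is sketched below. It assumes the non-critical images were rendered as <img data-src="..."> with no src attribute, so the browser skipped them on the initial load (a hypothetical convention). Once the deferred JavaScript runs, it copies each data-src into src, which starts the download. revealDeferredImages is a hypothetical helper.

```javascript
// Promote deferred images once the deferred JavaScript runs.
// Assumes placeholders like <img data-src="photo.jpg" alt="..."> (hypothetical markup).
function revealDeferredImages(root) {
  const images = Array.from(root.querySelectorAll('img[data-src]'));
  for (const img of images) {
    img.src = img.dataset.src; // setting src starts the download
    delete img.dataset.src;    // removes the data-src attribute
  }
  return images.length;
}

// In the browser: revealDeferredImages(document);
```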

As an honorable mention related to images, I was able to shave off a few bytes by cropping and lowering the image quality of some of the images. In my case, I used ImageProcessor.Web, but most platforms have some sort of tool to handle this sort of image optimization automation.

Page Speed Improvement: Remove Third-Party JavaScript

Removing third-party JavaScript can be a challenge. Not only do some third-party services require JavaScript, but it can sometimes be unclear where the JavaScript is coming from or what its exact purpose is. This is especially true of the analytics JavaScript.

Since we were loading hundreds of kilobytes of analytics JavaScript, I was lucky enough to be able to lean on a colleague, who did an audit and found that some of the analytics JavaScript could simply be removed without impacting the client’s business.

In certain other cases, I was able to delay loading of third-party JavaScript until after the initial JavaScript loaded. For example, a particularly JavaScript-heavy chat plugin could be deferred since the chat window wasn’t initially displayed. I did have to jump through some hoops to ensure the chat window would open if the first click was on the chat link, but it was worth deferring JavaScript that wasn’t needed for the initial page.

Page Speed Improvement: Change How Assets are Served

While I wasn’t able to use HTTP/2 server push to push all the assets I would like (e.g., the JavaScript), I was able to push the Icomoon icon font before it was requested by the browser. This helped to display the icons on the page sooner than they would otherwise display, though it did seem to delay the FCP slightly (perhaps because it was downloading alongside the HTML and CSS, thus slowing them down).

Given a bit more time, my preference would be to use SVG images for icons rather than an icon font (luckily, Icomoon supports exporting to SVG), and potentially also to embed some of the smaller SVGs directly in the CSS. Something like postcss-inline-svg should do the trick.

Page Speed Improvement: Remove Bulky Markup

I found that some of the pages had hundreds of kilobytes of HTML, and with a bit of effort I was able to remove some of this from the page. One really neat trick I learned was to use the <template> tag to ensure the browser ignores parts of the markup when rendering the page.

The interesting thing about the <template> tag is that the markup is still in the HTML document, but the browser parses it into an inert fragment that it doesn’t render or style (and whose images it doesn’t download), which reduces the amount of CPU and GPU time necessary to render the page. In my case, I was able to put complex portions of the main navigation and the entire footer in <template> tags, then insert them only when they’re needed.

I also found that there were large lists of data displayed on the page. I resolved this by using a pager to display a small portion of each list on the initial load, then showing the rest of the list when a user clicks a button. This simple technique had a surprising impact on page speed.

One thing I would like to look into in the future is parsing the JSON-LD script element on the page. One of the lists of markup on the page contained essentially the same data as the JSON-LD script. One option would be to use the microdata format to include the schema information directly in the markup, though Google seems to prefer the JSON-LD format, not to mention the microdata format is bulkier and more confusing than JSON-LD. With a bit of parsing, it should be possible to use the JSON-LD data to construct portions of the markup with JavaScript.
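As a rough sketch of that idea, the function below parses a schema.org ItemList from JSON-LD and builds list items from it. The ItemList, itemListElement, position, and name fields come from schema.org; the markup, the list__item class, and the function names are hypothetical. Names are HTML-escaped because they end up inside markup.

```javascript
// Escape text before placing it inside markup.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&#39;');
}

// Build <li> markup from a schema.org ItemList serialized as JSON-LD.
// A ListItem may carry its name directly or on its nested "item".
function listMarkupFromJsonLd(jsonText) {
  const data = JSON.parse(jsonText);
  const entries = [...(data.itemListElement || [])]
    .sort((a, b) => (a.position || 0) - (b.position || 0));
  return entries
    .map((entry) => `<li class="list__item">${escapeHtml(entry.name ?? entry.item?.name ?? '')}</li>`)
    .join('');
}

// In the browser, jsonText would be the textContent of
// document.querySelector('script[type="application/ld+json"]').
```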

Page Speed Improvement: Componentize CSS

Back in the days before HTTP/2, it was almost necessary to bundle all your CSS into a single file to avoid multiple round trips to the server. Another approach to load the CSS more quickly is to inline the critical path CSS (i.e., the CSS required to display the visible content above the fold).

Another approach I’ve seen used recently is to componentize the CSS so that you only serve the CSS necessary to display the components on the page. This can drastically reduce the amount of CSS you need on the page. Supposing your pages are built using components, it makes a lot of sense to use this approach.

All this really means is to create one CSS file per component (e.g., with Webpack, Grunt, or Gulp, assuming you are starting with Sass), then have your backend only include the CSS files on a given page for the components that are part of that page. Thanks to HTTP/2, there is virtually no performance penalty for splitting your CSS into several files.
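On the backend, the per-component approach can be as simple as the sketch below, which emits one stylesheet link per component on the page, each only once, in first-use order. It is written in JavaScript to match the other examples (e.g., for a Node backend); the /css/components/<name>.css layout and componentStylesheets are hypothetical.

```javascript
// Emit one stylesheet link per component used on the page, in first-use order,
// without duplicates. The /css/components/<name>.css layout is hypothetical.
function componentStylesheets(componentNames) {
  return [...new Set(componentNames)]
    .map((name) => `<link rel="stylesheet" href="/css/components/${encodeURIComponent(name)}.css">`)
    .join('\n');
}

console.log(componentStylesheets(['header', 'slideshow', 'header']));
```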

Page Speed Improvement: Locally Host Google Fonts

Google Fonts can negatively impact page speed because several round trips are required to download them. Also, since they are hosted on an external domain, that rules out some options, such as HTTP/2 server push and preloading (you can’t preload the font file because you don’t know its filename right away).

You can use a tool like Google Webfonts Helper to download a Google font and host it locally on your site.

Some Things You Don’t Need Angular / AngularJS / React / Vue For

Some developers want to use a framework because it seems to make life easier. That may be true some of the time. If you are building a marketing website with mostly static features, you might want to reevaluate your position and ensure you are balancing page speed against the perceived efficiency gains you expect from a framework, especially one as beefy as Angular.

Here are some things I found I didn’t need Angular for (and by extension, the other popular frameworks).

You Don’t Need Angular For: HTML Templates & Binding

If you need to construct some DOM elements with JavaScript, you have a few options. One would be to render them as markup (perhaps in a <template> tag), then have the JavaScript store those in memory. Another option would be to compile template literals to document fragments, like this:

    // Note: name is parsed as HTML here, so untrusted input should be escaped first.
    const name = prompt("What is your name?");
    const fragment = document.createRange().createContextualFragment(`
      <p>
        Hello, ${name}, how are you?
      </p>
    `);

If you like having some sort of tool to do this, you might want to consider a very small HTML templating tool, such as Dompiler. This is a tool I built to show that you don’t need much JavaScript to have nice HTML templating (it’s 1KB once compressed). Here’s what that might look like:

    const compiled = compile(`
      <li class="grid__item">
        <h2 ${namedElement("Header")} class="grid__item__header">
          ${item.name}
        </h2>
        <p ${namedElement("Bio")} class="grid__item__bio">
          ${item.bio}
        </p>
      </li>
    `);

That was taken from this example: Dompiler Modular Grid Example

You can do all the normal things you’d expect with this approach (dynamic values, looping, conditional sections, and so on).

Similarly, you probably don’t need data binding. Instead, you can listen for change/click/input events, then re-render sections of markup based on the updated value. The main thing you’ll want to do here to maintain your sanity is to have a central data model, then update the markup based on that model. I’ve used Dompiler for this, but it is also fairly simple with purely vanilla JavaScript.
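Here is a minimal vanilla sketch of that pattern: one central state object, a render function that derives markup from it, and updates that always go through the model. createStore and renderCounter are hypothetical names; in a real page the subscriber would write the markup into an element (or hand it to a templating tool) rather than a variable.

```javascript
// A tiny central model: state lives in one place, and every change notifies subscribers.
function createStore(initialState) {
  let state = initialState;
  const listeners = [];
  return {
    getState: () => state,
    update(changes) {
      state = { ...state, ...changes };
      listeners.forEach((listener) => listener(state));
    },
    subscribe(listener) {
      listeners.push(listener);
      listener(state); // render once with the current state
    },
  };
}

// Markup is always derived from the model, never edited piecemeal.
const renderCounter = (state) => `<p>${state.count} item(s) selected</p>`;

const store = createStore({ count: 0 });
let markup = '';
store.subscribe((state) => { markup = renderCounter(state); });

// In the browser this would run inside a click/change/input listener.
store.update({ count: store.getState().count + 1 });
```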

Although I have no personal experience with it, I also like what I’ve heard about Svelte, which supposedly avoids bloating the page because it compiles directly to vanilla JavaScript (rather than relying on a huge library of JavaScript functions). If you have to use a library, hopefully it’s one with a tiny JavaScript footprint.

You Don’t Need Angular For: Animated Elements

You can build an animated element with CSS and some very simple JavaScript. One example would be a slideshow or a carousel. These may have been a challenge to create years ago, but today they are trivially easy to create.
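As an illustration of how little JavaScript a slideshow needs when CSS does the animating, the sketch below only tracks which slide is current, wrapping around at either end. The visual change would come from CSS, e.g., a transition triggered when a hypothetical is-active class moves between slides. createSlideshow and the data-slide attribute are hypothetical, not the Carousel module from earlier.

```javascript
// Track the current slide and wrap at either end; CSS handles the visuals.
function createSlideshow(slideCount, onChange) {
  let index = 0;
  const go = (target) => {
    index = ((target % slideCount) + slideCount) % slideCount; // also wraps negatives
    onChange(index);
    return index;
  };
  return {
    next: () => go(index + 1),
    previous: () => go(index - 1),
    autoplay: (ms) => setInterval(() => go(index + 1), ms), // returns the interval handle
  };
}

// Browser usage:
// const slides = [...document.querySelectorAll('[data-slide]')];
// createSlideshow(slides.length, (i) =>
//   slides.forEach((el, n) => el.classList.toggle('is-active', n === i)),
// ).autoplay(5000);
```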

You might also be tempted to use a library like GreenSock, but I would again suggest comparing the page speed cost of an animation library against the perceived gain in efficiency. You can do an awful lot with CSS, and in particular with CSS animations.

Key Takeaways

There are a few things I hope you keep in mind now that you’ve made it all the way to the end of this article:

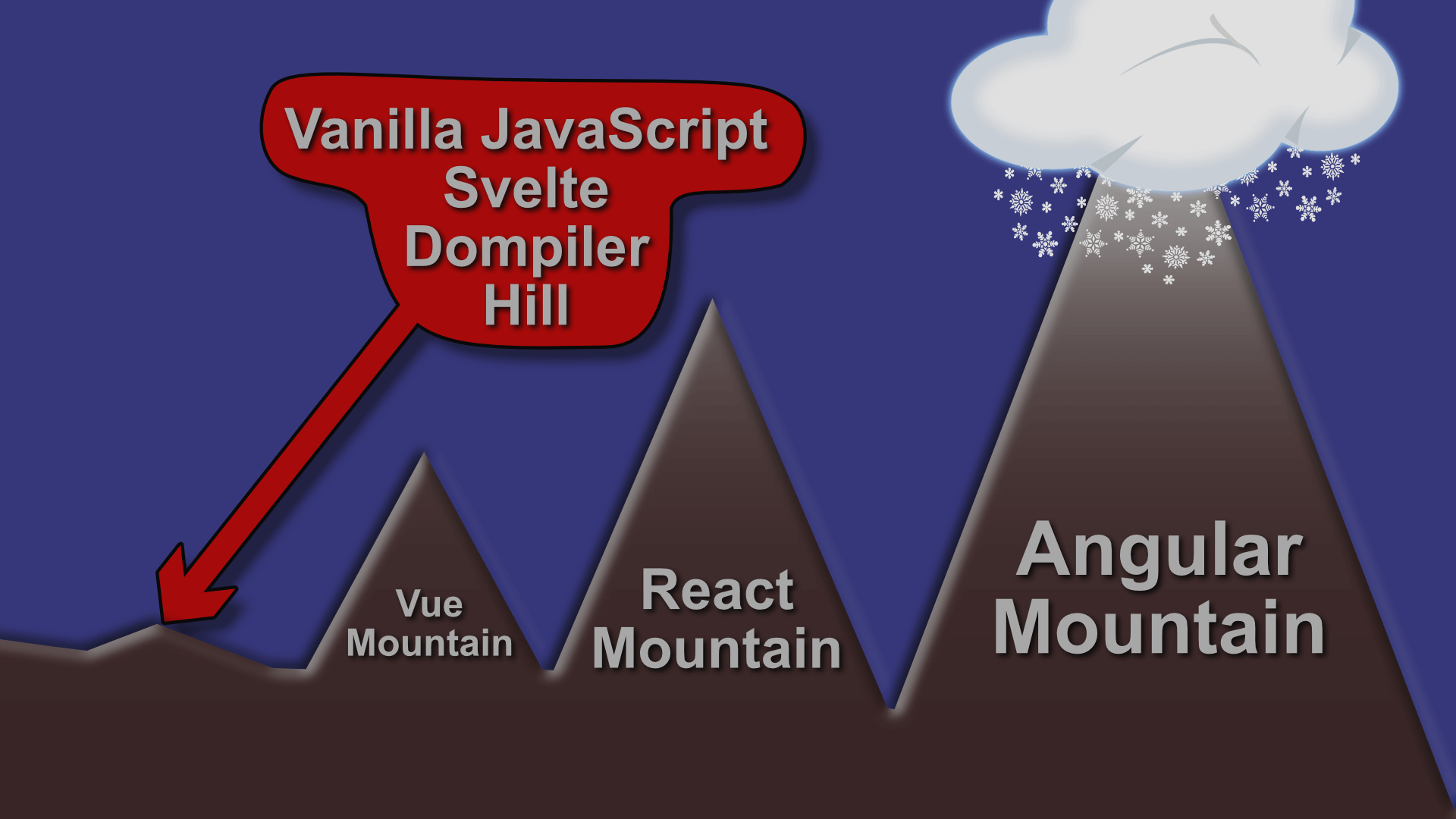

Reconsider Frameworks: Frameworks like Angular aren’t always necessary and shouldn’t be the default. The list of things you don’t need them for would be very long (modern browsers do quite a lot these days). If you do feel you need one, choose one with a small footprint, like Svelte or Dompiler.

Componentize Everything: If you componentize your CSS and JavaScript, you only need to include the bare minimum on any given page for it to work.

Measure Early and Often: Use tools like PageSpeed Insights and Lighthouse to measure page speed early and often. It is much easier to change a technology at the start of a project than at the end. Use experiments via feature flags rather than guessing at the cause of a slowdown.

Be Lazy: Load things only once you need them, or at least not right away. Prioritize the most essential resources, and load the rest after that.

Server-Side Rendering Won’t Save You: Lots of the popular frameworks claim that server-side rendering will boost page speed. Certainly, it will improve FCP. However, it may not do much to improve the TTI (true TTI, not just TTI as measured by a tool like PSI).

Irony: You may notice this article has nearly 1MB of JavaScript (a bit much for an image, some code snippets, and text). The irony is not lost on me. It seems that Squarespace, the platform this website is hosted on, could take a few hints from this article.